What 100+ Feedback Calls Taught Us About Building an AI-Powered BPO

TL;DR:

Over eight weeks, we invited 100+ customer-experience leaders, operations directors, and technology buyers to scrutinise our vision of an AI-driven BPO.

The conversations, 30 to 45 minutes apiece, were frank, detailed, and occasionally eye-opening. Out of them came five core takeaways that reshaped how we build, sell, and scale this thing. We’ve left out the deck-polishing advice (you’ve heard enough about slide clarity).

What follows is the substance:

- How the tools need to work

- Why they matter

- What it will take to land them in the real world

“Opening it up to a broader audience generates more value.”

Key Takeaway 1 - SmartAgent, on-the-job coaching wrapped inside every reply

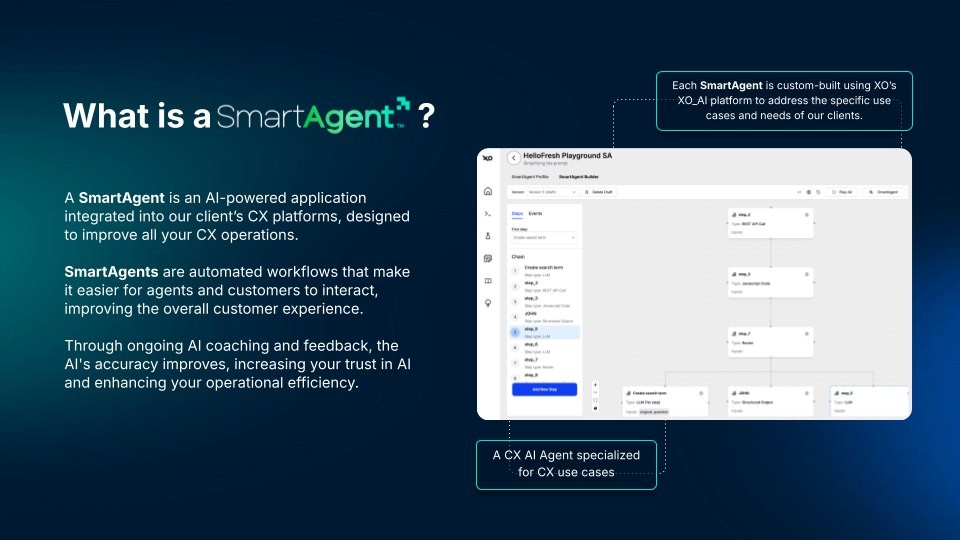

The main topic that grabbed everyone’s attention was SmartAgent Copilot, our real-time agent-assist layer.

Leaders understood the surface value quickly: the tool watches the context of a ticket or a call, drafts a compliant response, and lets the agent accept, edit, or reject it.

But what caught more attention than anticipated was a secondary effect:

Every suggestion doubles as a micro-lesson for the agent, embedding policy details without a lecture hall.

“Every response that an AI gives an associate is a micro-learning.” - Workforce Planning Leader

Seasonal businesses and high-growth brands felt this benefit most keenly.

A Retail VP managing thousands of Christmas temps described how junior agents shortened ramp-up by days because, as he put it: “the policy lives in the draft.”

“The policy lives in the message draft” - VP of CX

The effect extended beyond the agent experience.

QA and coaching leaders liked that every choice an agent makes (accept, tweak, discard) lands in a feedback ledger that surfaces ambiguous policy lines. Instead of guessing why edits occur, coaches can jump straight to the source guideline, tighten it, and see the impact in the next shift.
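As a rough illustration of how such a ledger could surface ambiguous policy lines, the sketch below groups agent decisions by guideline and flags the ones agents rarely accept unchanged. It is not SmartAgent's implementation: `LedgerEntry`, `ambiguous_guidelines`, the guideline IDs, and both thresholds are hypothetical names and values chosen for the example, and the ledger data is invented.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LedgerEntry:
    guideline_id: str  # hypothetical ID of the policy line behind a suggestion
    action: str        # "accept", "tweak", or "discard"


def ambiguous_guidelines(entries, min_events=5, max_accept_rate=0.6):
    """Return (guideline_id, accept_rate) pairs, lowest accept rate first.

    A guideline is flagged when it has at least `min_events` decisions and
    agents accepted the draft as-is in at most `max_accept_rate` of them.
    Both thresholds are illustrative assumptions.
    """
    counts = defaultdict(Counter)
    for entry in entries:
        counts[entry.guideline_id][entry.action] += 1

    flagged = []
    for guideline_id, actions in counts.items():
        total = sum(actions.values())
        if total < min_events:
            continue  # too few decisions to say anything useful
        accept_rate = actions["accept"] / total
        if accept_rate <= max_accept_rate:
            flagged.append((guideline_id, accept_rate))
    return sorted(flagged, key=lambda pair: pair[1])


# Invented sample ledger: one guideline is mostly accepted, one mostly edited.
ledger = (
    [LedgerEntry("returns-30-day", "accept")] * 9
    + [LedgerEntry("returns-30-day", "tweak")]
    + [LedgerEntry("gift-card-refund", "accept")] * 2
    + [LedgerEntry("gift-card-refund", "tweak")] * 5
    + [LedgerEntry("gift-card-refund", "discard")] * 3
)
flagged = ambiguous_guidelines(ledger)
print(flagged)
```

A low accept rate only marks a guideline as a candidate for review; a coach would still read the edited drafts to decide whether the wording or the policy itself needs tightening.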

That transparency turned sceptics into allies; one veteran supervisor admitted he expected “another black-box suggestion engine” but left convinced that the audit trail “turns coaching into something evidence-based rather than anecdotal.”

“It shows you exactly which sentences get changed and why. That’s the first time I’ve seen AI help the QA team as much as it helps the agent.” - CX Supervisor

By the end of each session the lingering concern was not about capability but about governance:

- How often should the underlying model refresh?

- Who signs off on new brand intents?

- How do exceptions propagate?

The consensus landed on a cadence aligned to business rhythm: weekly for fast fashion, monthly for regulated finance. A Copilot must flex to industry tempo and move at the speed of the business, not the other way round.

Key Takeaway 2 - Voice of the Customer, from static dashboards to conversational discovery

If Copilot centred on agent productivity, Voice of the Customer (VOC) pivoted the conversation to managerial insight.

Participants immediately compared VOC (a method that collects and analyses customer feedback) to large language model chatbots, but were quick to point out its distinct advantages: comprehensive data coverage and the ability to verify its findings.

“Do I think of this as ChatGPT fed by every customer call? Exactly.”

This framing was common. But beyond the LLM comparison, leaders valued what VOC actually unlocks: a conversational interface that lets them ask pointed questions (“Why are VIP cancellations spiking in EMEA?”) and receive narrative answers tethered to specific conversations.

While dashboards show how many and how much, VOC shows why things happen.

“This is a reporting tool that gives qualitative insight. Dashboards already cover the quantitative.”

As VOC gained traction in the conversation, trust surfaced as the non-negotiable prerequisite. Security chiefs pressed hard on provenance:

“How do I believe it’s the right content if I can’t jump to the source?”

The ability to click a claim and see the original conversation—for many, a ten-second reality check—neutralised most doubts.
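One way to picture this click-through check is to have every claim carry the IDs of the conversations it rests on, so a reader can always resolve it back to source. The sketch below is a minimal illustration under that assumption, not a description of how VOC stores its data; `Claim`, `resolve_sources`, the conversation IDs, and the transcripts are all invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    source_ids: tuple  # IDs of the conversations the claim rests on


def resolve_sources(claim, conversations):
    """Return the transcripts behind a claim.

    Raises KeyError if the claim cites a conversation that is not in the
    store, so an unverifiable claim fails loudly instead of passing silently.
    """
    missing = [cid for cid in claim.source_ids if cid not in conversations]
    if missing:
        raise KeyError(f"claim cites unknown conversations: {missing}")
    return [conversations[cid] for cid in claim.source_ids]


# Invented sample store and claim, for illustration only.
conversations = {
    "c-101": "Customer: I cancelled because the renewal price doubled.",
    "c-102": "Customer: Nobody told me the VIP tier was changing.",
}
claim = Claim(
    text="Cancellations mention unannounced pricing changes.",
    source_ids=("c-101", "c-102"),
)
sources = resolve_sources(claim, conversations)
for transcript in sources:
    print(transcript)
```

Treating a missing source as an error rather than a warning reflects the point the security leaders made: a claim that cannot be traced to a conversation should not be shown as a finding.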

Once credibility was in place, the conversation advanced to ambition.

Analysts wanted VOC not merely to answer isolated “why” questions but to assemble those answers into end-to-end journeys that reveal breakpoints across channels.

“What nobody is doing is streaming all that together into a journey you can turn into a roadmap.”

In short, participants saw VOC doing more than answering single questions: it could connect the answers into a view of the whole customer journey and where it breaks.

Leaders considered bringing these journey maps to quarterly business reviews in place of numbers-only slides, so that the case for change rests on real customer experiences rather than guesswork.

Key Takeaway 3 - Value Proposition, Differentiation and Aligned Incentives

AI “partnerships” now populate every BPO brochure, so leaders demanded clarity on what sets an integrated model apart from the standard labour-contract + third-party SaaS bundle.

“I can pull up a hundred ‘AI-powered BPOs.’ Show me why you’re different.”

The answer that resonated was incentive alignment.

When the same provider owns both the people and the platform, there is no tug-of-war between seat hours (the BPO metric) and licence seats (the software metric).

“You’re coming from a BPO angle but adding AI on top, so you hit the right balance.”

Evidence of blended staffing (human specialists supported by AI suggestions) further strengthened the case.

One sceptic, after walking through a travel-industry example, changed his view entirely:

“I started ready to poke holes, but you’ve got a stronger model if those figures hold.”

Just as important, the integrated approach sidestepped antagonistic renewal cycles, one of the pain points in traditional BPO + SaaS setups.

Instead of renegotiating licences separate from headcount, clients gained a single commercial rhythm anchored to operational outcomes—a simplification every finance team welcomed.

At XtendOps, we’re not replacing people with AI; we’re building a model where agents train it, shape it, and keep it real. That’s how you avoid the disconnect between tech promises and frontline reality.

Key Takeaway 4 - For Implementation and Operations, Leverage Context and Keep Changes to a Minimum

Veteran operations leaders, wary of over-promised plug-ins, focused on how the AI tools would integrate with their existing workflows.

“I want guests to get the same great experience no matter which centre they reach.”

Their priority was ensuring a consistent customer experience across all contact centers. To get there, leaders emphasized the value of leveraging existing BPO knowledge—macros, templates, and escalation paths—as the foundation for training and refining the AI model, which would also enhance the plug-in's reliability.

System compatibility also emerged as a top concern.

Leaders wanted to avoid complex, multi-year implementations.

One support-systems manager highlighted the benefit of having AI scan every interaction to identify issues, including tickets that agents filed under the wrong category.

“I’d rather have AI scan everything and just tell us what’s happening—including when agents pick the wrong category.”
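The category check the manager describes can be pictured as a comparison between the category an agent picked and the one a model assigns to the same ticket. The sketch below assumes both labels already exist on each ticket; the field names `agent_category` and `model_category`, the function `category_mismatches`, and the sample tickets are hypothetical, and how the model label is produced is out of scope here.

```python
from collections import Counter


def category_mismatches(tickets):
    """Count (agent_category, model_category) pairs where the two disagree."""
    return Counter(
        (t["agent_category"], t["model_category"])
        for t in tickets
        if t["agent_category"] != t["model_category"]
    )


# Invented sample tickets, for illustration only.
tickets = [
    {"id": 1, "agent_category": "billing", "model_category": "billing"},
    {"id": 2, "agent_category": "other", "model_category": "shipping"},
    {"id": 3, "agent_category": "other", "model_category": "shipping"},
    {"id": 4, "agent_category": "billing", "model_category": "refund"},
]
mismatches = category_mismatches(tickets)
top = mismatches.most_common(1)
print(top)
```

A disagreement does not prove the agent was wrong, since the model can misread a ticket too. The most frequent pairs are best treated as a review queue, for example a catch-all category that keeps absorbing tickets about one specific issue.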

Training came up frequently as well.

Learning leaders advocated for a model deployment approach similar to agent onboarding, with clear objectives, measurable milestones, and rapid feedback loops.

“To do it right, you actually have to be tighter with the information, not looser.”

The scope of deployment was also a key consideration.

Many enterprises with both in-house and outsourced teams inquired whether the AI toolkit could be extended to their internal agents. Avoiding a two-tier system was crucial to maintaining data consistency and team-wide adoption.

Finally, the option to deploy the AI tools across multiple BPO vendors was seen as a valuable way to mitigate single-supplier risk, while keeping standards consistent.

A leader who manages a multi-centre operation summarised this succinctly:

“Consistency first; vendor politics second.”

This is the model behind our operational rollout: AI is layered onto existing CX workflows—not forced through one-size-fits-all platforms. Teams are structured for AI, agents stay central, and business reviews shift from reporting to creating SmartAgents.

Key Takeaway 5 - Go-to-Market & Commercial Strategy: Start with Pain, Finish with a Single Line Item

Even the sharpest solution falls flat if the story misses the mark.

That was the clear message from investors, marketers, and former founders: lead every pitch with the buyer’s pain—quantified in their own terms.

“The problem statement changes for every organisation you meet.”

That means starting with the AHT spike in healthcare, the refund backlog in fashion, or the repeat-contact headache in logistics—whatever keeps that buyer awake.

From there, conversations naturally forked into two narrative streams: operations heads leaned into Copilot’s impact on efficiency, while analytics teams gravitated toward VOC’s root-cause discovery.

An SVP of CX suggested maintaining clearly separated decks:

“Have two very different pitches for the copilot versus the customer voice.”

Procurement teams, meanwhile, pushed for contractual simplicity.

The term “Augmented Agent Pricing” gained traction—a seat rate familiar to finance teams, but with AI fully baked in.

A decision maker pointed out the benefit in plain terms:

“It feels like buying standard BPO support, except the agents show up with superpowers.”

That single line item eliminated secondary vendor reviews, shortened approval cycles, and made total cost of ownership more transparent.

Appendix material—security certifications, architecture diagrams, testimonial clips—still matters, but only after the economics make sense.

One enterprise architect put it bluntly:

“Have your visuals ready so when they ask, you ping them straight to the answer.”

Finally, one unexpected benefit of broadening the feedback loop was narrative clarity. Involving adjacent teams—legal, marketing, finance—surfaced objections early and sharpened the story.

One ops leader’s comment captured the value of widening the lens:

“Opening it up to a broader audience generates more value.”

Putting It All Together

Hours of conversations reduced to a simple equation:

AI that lives where the work happens, is measured in the metrics buyers already track, and is purchased through a contract that aligns every incentive earns trust quickly.

That trust carried through each takeaway.

SmartAgent Copilot resonated because it merges productivity with in-flow learning—turning every suggestion into a coaching moment.

Voice of the Customer resonated because it turns conversation exhaust into strategy you can click to verify.

And the AI BPO Model resonated because it unifies people, platform, and accountability—eliminating the typical friction between tools and teams.

Moving forward, we will broaden the scope of our feedback calls to delve deeper into commercial terms, deal structures, and implementation preferences of prospective buyers.

This will complement the product-specific insight already gathered and help shape a model that scales in the real world.

We’re shaping how AI shows up in real operations—not just in theory, but in practice. Curious to see more? Let’s connect.

More to come. Stay tuned!